Debugging the Algorithm Model

After an algorithm model is deployed successfully, you can debug the deployment to verify that the service runs as expected.

Take the following steps to debug the algorithm model:

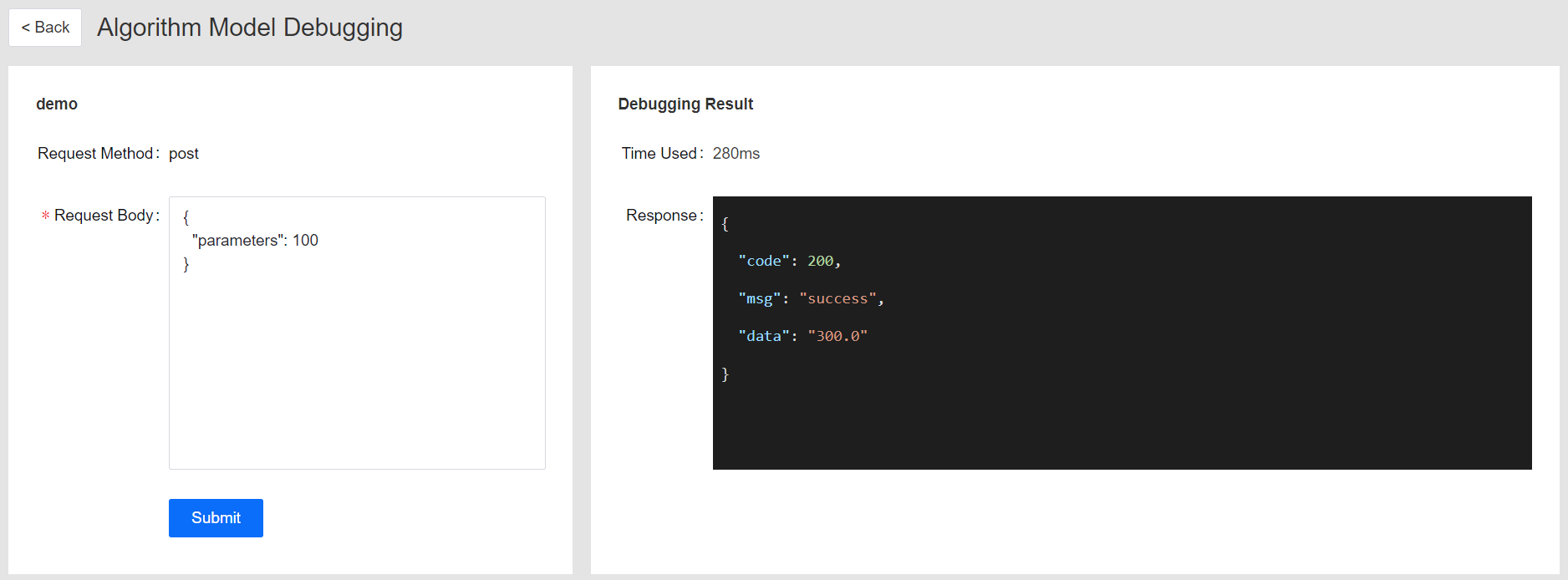

In the Operations column of the deployment list, find the deployment with a status of Success, and click the Debug icon to open the Algorithm Model Debugging page.

In the Request Body input box, enter the request parameters based on the actual function of the algorithm model.

Click Submit to test running the service.

In the Debug Result column, check the time used and response of the service invocation. See the following example:
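Outside the console, the same check (send a request body, then read the response and the time used) can be approximated with a short script. The following is only a minimal sketch under stated assumptions: `debug_invoke`, `http_sender`, and `fake_send` are hypothetical helper names, the `{"data": [...]}` request body and the `{"status": ..., "rows": ...}` response are made-up shapes, and a real service hosted by API Management will most likely need a service URL and authentication that are not shown here.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict, Tuple


def http_sender(url: str, timeout: float = 10.0) -> Callable[[bytes], bytes]:
    """Build a sender that POSTs a JSON payload to `url` (hypothetical URL)."""
    def send(payload: bytes) -> bytes:
        request = urllib.request.Request(
            url,
            data=payload,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=timeout) as reply:
            return reply.read()
    return send


def debug_invoke(send: Callable[[bytes], bytes],
                 request_body: Dict[str, Any]) -> Tuple[Any, float]:
    """Send the request body and return (parsed response, time used in ms)."""
    payload = json.dumps(request_body).encode("utf-8")
    started = time.perf_counter()
    raw = send(payload)
    elapsed_ms = (time.perf_counter() - started) * 1000.0
    return json.loads(raw.decode("utf-8")), elapsed_ms


def fake_send(payload: bytes) -> bytes:
    """Local stand-in for a deployed model: reports how many rows it received."""
    body = json.loads(payload)
    return json.dumps({"status": 0, "rows": len(body["data"])}).encode("utf-8")


# Assumed request shape; replace it with the parameters your model expects.
response, elapsed_ms = debug_invoke(fake_send, {"data": [[1.0, 2.0], [3.0, 4.0]]})
print(response, f"{elapsed_ms:.2f} ms")
```

To try this against a real endpoint, pass `http_sender("<your service URL>")` instead of `fake_send`. The sender is injected so that the timing and parsing logic can be exercised without network access.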

Next step

After the algorithm model deployment starts successfully, a service is created automatically and hosted by API Management. Go to EnOS Management Console > API Management > Public API > ml service to view details of the machine learning prediction service API.

If the debugging fails, or the debugging result does not match the actual functionality of the algorithm model, you can update the model source files or the deployment configuration, and then deploy and debug the algorithm model again.
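Deciding whether a debugging result "matches" can be made explicit by comparing the returned values with values you expect for a known input. The sketch below assumes the response carries a flat list of numeric predictions, which may not hold for your model; `matches_expected` is a hypothetical helper, not part of the product.

```python
import math
from typing import Sequence


def matches_expected(actual: Sequence[float],
                     expected: Sequence[float],
                     rel_tol: float = 1e-6) -> bool:
    """Return True when both sequences have equal length and close values."""
    if len(actual) != len(expected):
        return False
    return all(math.isclose(a, e, rel_tol=rel_tol)
               for a, e in zip(actual, expected))


# Illustrative values only: expected predictions for a known test input.
expected = [0.25, 0.75]
ok = matches_expected([0.25, 0.7500000001], expected)   # within tolerance
bad = matches_expected([0.25, 0.9], expected)           # clearly different
print(ok, bad)
```

A relative tolerance is used because floating-point outputs rarely match exactly across environments. If expected values can be zero, consider also passing `abs_tol` to `math.isclose`.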